Factorized Motion Fields for Fast Sparse Input Dynamic View Synthesis

SIGGRAPH, 2024

Nagabhushan Somraj, Kapil Choudhary, Sai Harsha Mupparaju and Rajiv Soundararajan

Indian Institute of Science

Abstract

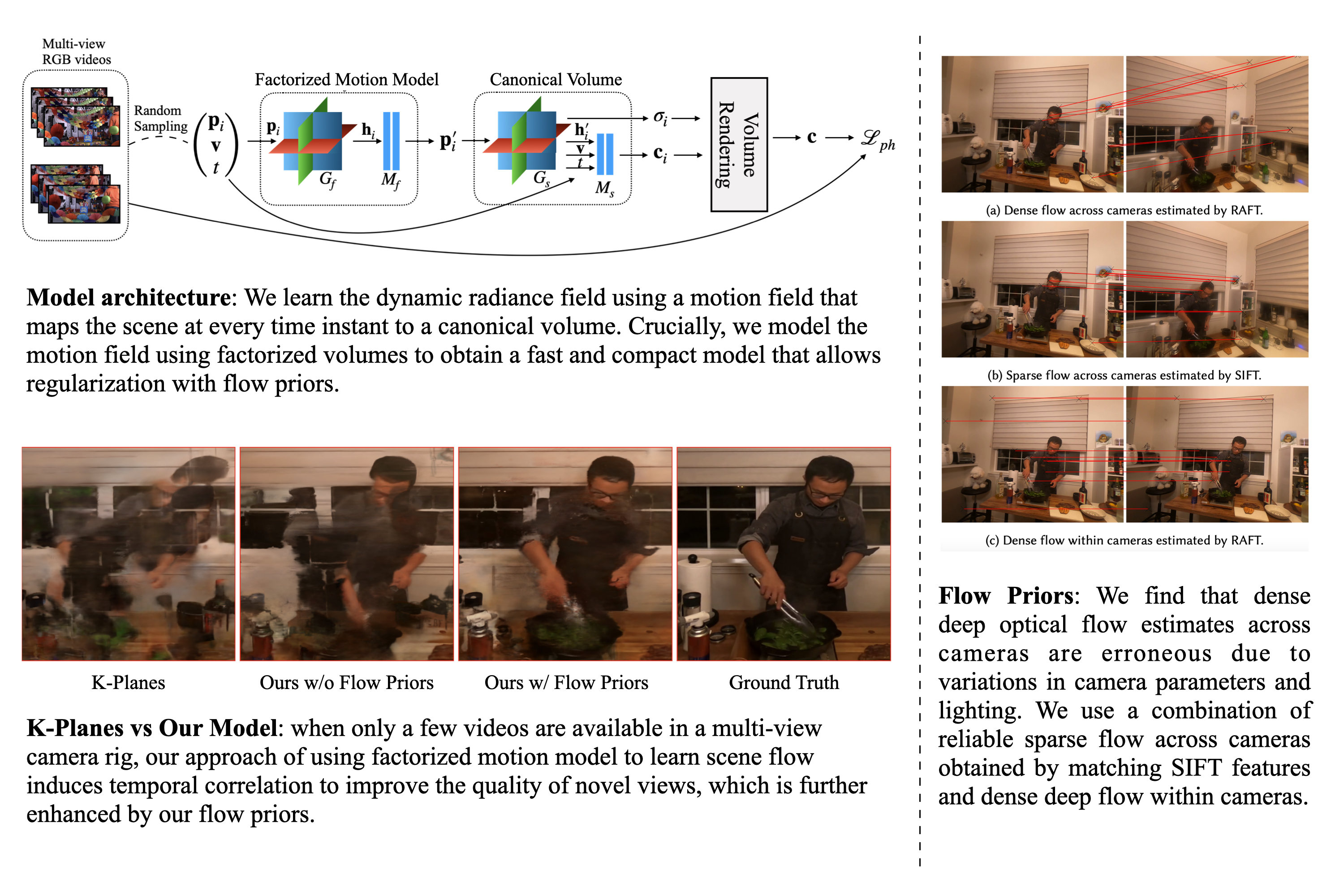

Designing a 3D representation of a dynamic scene for fast optimization and rendering is a challenging task. While recent explicit representations enable fast learning and rendering of dynamic radiance fields, they require a dense set of input viewpoints. In this work, we focus on learning a fast representation for dynamic radiance fields with sparse input viewpoints. However, optimization with sparse input is under-constrained and necessitates motion priors to constrain the learning. Existing fast dynamic scene models do not explicitly model the motion, which makes them difficult to constrain with motion priors. We design an explicit motion model as a factorized 4D representation that is fast and can exploit the spatio-temporal correlation of the motion field. We then introduce reliable flow priors, combining sparse flow priors across cameras with dense flow priors within cameras, to regularize our motion model. Our model is fast and compact, and it achieves very good performance on popular multiview dynamic scene datasets with sparse input viewpoints.

Sample comparison videos


Comparison with Competing Models

K-Planes vs RF-DeRF

Depth Priors vs Flow Priors

Cross-Camera Dense Flow Priors vs Our Priors

Comparisons with Ablated Models

Without Sparse Flow Priors

Without Dense Flow Priors

With Dense Input Views

N3DV Dataset

InterDigital Dataset

Citation

If you use our work, please cite our paper:

Nagabhushan Somraj, Kapil Choudhary, Sai Harsha Mupparaju and Rajiv Soundararajan,

"Factorized Motion Fields for Fast Sparse Input Dynamic View Synthesis",

In Proceedings of the ACM Special Interest Group on Computer Graphics and Interactive Techniques (SIGGRAPH),

Jul 2024, doi: 10.1145/3641519.3657498.

Bibtex:

@inproceedings{somraj2024rfderf,
    title = {Factorized Motion Fields for Fast Sparse Input Dynamic View Synthesis},
    author = {Somraj, Nagabhushan and Choudhary, Kapil and Mupparaju, Sai Harsha and Soundararajan, Rajiv},
    booktitle = {ACM Special Interest Group on Computer Graphics and Interactive Techniques (SIGGRAPH)},
    month = {July},
    year = {2024},
    doi = {10.1145/3641519.3657498}
}
